WannaCry About Business Models

For the users and administrators of the estimated 200,000 computers affected by “WannaCry” — a number that is expected to rise as new variants come online (the original was killed serendipitously by a security researcher) — the answer to the question implied in the name is “Yes.”

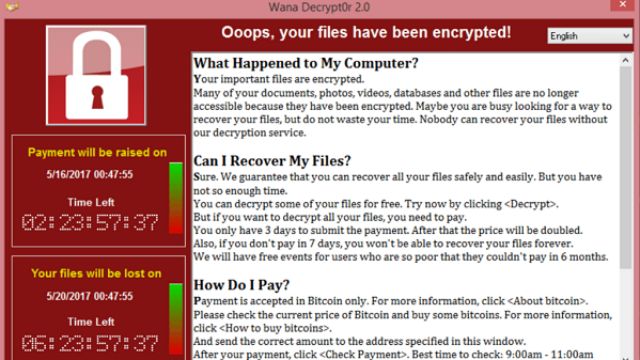

WannaCry is a type of malware called “ransomware”: it encrypts a computer’s files and demands payment to decrypt them. Ransomware is not new; what made WannaCry so destructive was that it was built on top of a computer worm, a type of malware that replicates itself onto other computers on the same network (said network, of course, can include the Internet).

Worms have always been the most destructive type of malware, and the most famous: even non-technical readers may recognize names like Conficker (an estimated $9 billion of damage in 2008), ILOVEYOU (an estimated $15 billion of damage in 2000), or MyDoom (an estimated $38 billion of damage in 2004). There have been many more, but not so many in the last few years: the 2000s were the sweet spot when it came to hundreds of millions of computers being online with an operating system, Windows XP, that was horrifically insecure, operated by users given to clicking and paying for scams to make the scary popups go away.

Over the ensuing years Microsoft has, from Windows XP Service Pack 2 on, gotten a lot more serious about security, network administrators have gotten a lot smarter about locking down their networks, and users have at least improved at not clicking on things they shouldn’t. Still, as this last weekend shows, worms remain a threat, and as usual, everyone is looking for someone to blame. This time, though, there is a juicy new target: the U.S. government.

The WannaCry Timeline

Microsoft President and Chief Legal Officer Brad Smith didn’t mince any words on The Microsoft Blog (“WannaCrypt” is an alternative name for WannaCry):

Starting first in the United Kingdom and Spain, the malicious “WannaCrypt” software quickly spread globally, blocking customers from their data unless they paid a ransom using Bitcoin. The WannaCrypt exploits used in the attack were drawn from the exploits stolen from the National Security Agency, or NSA, in the United States. That theft was publicly reported earlier this year. A month prior, on March 14, Microsoft had released a security update to patch this vulnerability and protect our customers. While this protected newer Windows systems and computers that had enabled Windows Update to apply this latest update, many computers remained unpatched globally. As a result, hospitals, businesses, governments, and computers at homes were affected.

Smith mentions a number of key dates, but it’s important to get the timeline right, so let me summarize it as best I understand it:

- 2001: The bug in question was first introduced in Windows XP and has hung around in every version of Windows since then

- 2001–2015: At some point the NSA (likely the Equation Group, allegedly a part of the NSA) discovered the bug and built an exploit named EternalBlue, and may or may not have used it

- 2012–2015: An NSA contractor allegedly stole more than 75% of the NSA’s library of hacking tools

- August, 2016: A group called “ShadowBrokers” published hacking tools they claimed were from the NSA; the tools appeared to come from the Equation Group

- October, 2016: The aforementioned NSA contractor was charged with stealing NSA data

- January, 2017: ShadowBrokers put a number of Windows exploits up for sale, including an SMB zero-day exploit (likely the “EternalBlue” exploit used in WannaCry) for 250 BTC (around $225,000 at that time)

- March, 2017: Microsoft, without fanfare, patched a number of bugs without giving credit to whoever discovered them; among them was the bug targeted by the EternalBlue exploit, and it seems very possible the NSA warned the company

- April, 2017: ShadowBrokers released a new batch of exploits, including EternalBlue, perhaps because Microsoft had already patched the underlying bugs (dramatically reducing the value of zero-day exploits in particular)

- May, 2017: WannaCry, based on the EternalBlue exploit, was released and spread to around 200,000 computers before its kill switch was inadvertently triggered; new versions have already begun to spread

It is axiomatic that the malware authors bear ultimate responsibility for WannaCry; hopefully they will be caught and prosecuted to the full extent of the law.

After that, though, it gets a bit murky.

Spreading Blame

The first thing to observe from this timeline is that, as with all Windows exploits, the initial blame lies with Microsoft. It is Microsoft that developed Windows without a strong security model for networking in particular, and while the company has done a lot of work to fix that, many fundamental flaws still remain.

Not all of those flaws are Microsoft’s fault: the default assumption for personal computers has always been to give applications mostly unfettered access to the entire computer, and all attempts to limit that have been met with howls of protest. iOS created a new model, in which applications were put in a sandbox and limited to carefully defined hooks and extensions into the operating system; that model, though, was only possible because iOS was new. Windows, in contrast, derived all of its market power from the established base of applications already in the market, which meant overly broad permissions couldn’t be removed retroactively without ruining Microsoft’s business model.

Moreover, the reality is that software is hard: bugs are inevitable, particularly in something as complex as an operating system. That is why Microsoft, Apple, and basically any conscientious software developer regularly issues updates and bug fixes; that products can be fixed after the fact is inextricably linked to why they need to be fixed in the first place!

To that end, though, it’s important to note that Microsoft did fix the bug two months ago: any computer that applied the March patch (which, by default, is installed automatically) is protected from WannaCry. Windows XP is an exception, but Microsoft stopped selling that operating system in 2008 and stopped supporting it in 2014 (despite that fact, Microsoft did release a Windows XP patch to fix the bug on Friday night). In other words, end users and the IT organizations that manage their computers bear responsibility as well. Simply staying up-to-date on critical security patches would have kept them safe.

Still, staying up-to-date is expensive, particularly in large organizations, because updates break stuff. That “stuff” might be critical line-of-business software, which may be from 3rd-party vendors, external contractors, or written in-house: that said software is so dependent on one particular version of an OS is itself a problem, so you can blame those developers too. The same goes for hardware and its associated drivers: there are stories from the UK’s National Health Service of MRI and X-ray machines that only run on Windows XP, critical negligence by the manufacturers of those machines.

In short, there is plenty of blame to go around; how much, though, should go into the middle part of that timeline — the government part?

Blame the Government

Smith writes in that blog post:

This attack provides yet another example of why the stockpiling of vulnerabilities by governments is such a problem. This is an emerging pattern in 2017. We have seen vulnerabilities stored by the CIA show up on WikiLeaks, and now this vulnerability stolen from the NSA has affected customers around the world. Repeatedly, exploits in the hands of governments have leaked into the public domain and caused widespread damage. An equivalent scenario with conventional weapons would be the U.S. military having some of its Tomahawk missiles stolen.

This comparison, frankly, is ridiculous, even if you want to stretch and say that the impact of WannaCry on places like hospitals may actually result in physical harm (albeit much less than a weapon of war!).

First, the U.S. government creates Tomahawk missiles, but it is Microsoft that created the bug (even if inadvertently). What the NSA did was discover the bug (and subsequently exploit it), and that difference is critical. Finding bugs is hard work, requiring a lot of money and effort. It’s worth considering why, then, the NSA was willing to do just that, and the answer is right there in the name: national security. And, as we’ve seen through examples like Stuxnet, these exploits can be a powerful weapon.

Here is the fundamental problem: insisting that the NSA hand over exploits immediately is to effectively demand that the NSA not find the bug in the first place. After all, a patched (and thus effectively published) bug isn’t worth nearly as much, either monetarily, as ShadowBrokers found out, or militarily, which means the NSA would have no reason to invest the money and effort to find such bugs. To put it another way, the alternative is not that the NSA would have told Microsoft about EternalBlue years ago, but that the underlying bug would have remained un-patched for even longer than it was (perhaps to be discovered by other entities like China or Russia; the NSA is not the only organization searching for bugs).

In fact, the real lesson to be learned with regard to the government is not that the NSA should be Microsoft’s QA team, but rather that leaks happen: that is why, as I argued last year in the context of Apple and the FBI, government efforts to weaken security by fiat or the insertion of golden keys (as opposed to discovering pre-existing exploits) are wrong. Such an approach is much more in line with Smith’s Tomahawk missile argument, and given the indiscriminate and immediate way in which attacks can spread, the country that would lose the most from such an approach would be the one that has the most to lose (i.e. the United States).

Blame the Business Model

Still, even if the U.S. government is less to blame than Smith insists, nearly two decades of dealing with these security disasters suggests there is a systemic failure happening, and I think it comes back to business models. The fatal flaw of software, beyond the various technical and strategic considerations I outlined above, is that for the first several decades of the industry software was sold for an up-front price, whether that be for a package or a license.

This resulted in problematic incentives and poor decision-making by all sides:

- Microsoft is forced to support multiple distinct code bases, which is expensive and difficult and not tied to any monetary incentives (thus, for example, the end of support for Windows XP).

- 3rd-party vendors are inclined to view a particular version of an operating system as a fixed object: after all, Windows 7 is distinct from Windows XP, which means it is possible to specify that only XP is supported. This is compounded by the fact that 3rd-party vendors have no ongoing monetary incentive to update their software; after all, they have already been paid.

- The most problematic impact is on buyers: computers and their associated software are viewed as capital costs, which are paid for once and then depreciated over time as the value of the purchase is realized. In this view ongoing support and security are an additional cost divorced from ongoing value; the only reason to pay is to avoid a future attack, which is impossible to predict both in terms of timing and potential economic harm.

The truth is that software — and thus security — is never finished; it makes no sense, then, that payment is a one-time event.

SaaS to the Rescue

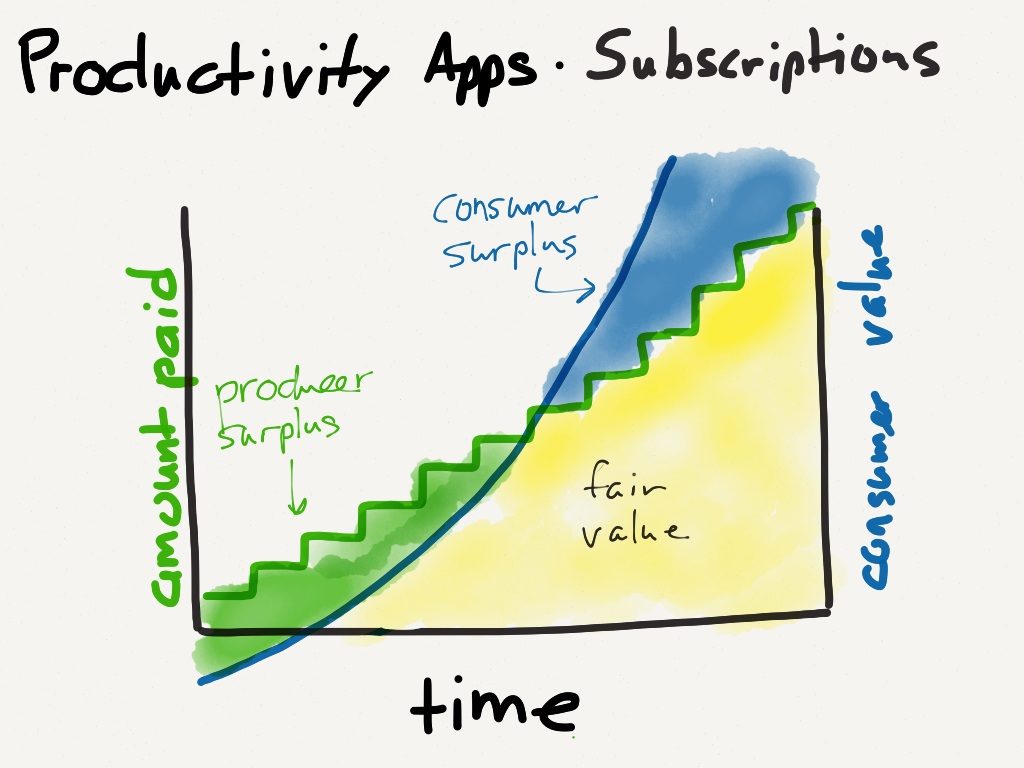

Four years ago I wrote about why subscriptions are better for both developers and end users in the context of Adobe’s move away from packaged software:

That article was about the benefit of better matching Adobe’s revenue with the value gained by its users: the price of entry is lower while the revenue Adobe extracts over time is more commensurate with the value it delivers. And, as I noted, “Adobe is well-incentivised to maintain the app to reduce churn, and users always have the most recent version.”

This is exactly what is necessary for good security: vendors need to keep their applications (or in the case of Microsoft, operating systems) updated, and end users need to always be using the latest version. Moreover, pricing software as a service means it is no longer a capital cost with all of the one-time payment assumptions that go with it: rather, it is an ongoing expense that implicitly includes maintenance, whether that be by the vendor or the end user (or, likely, a combination of the two).

I am, of course, describing software-as-a-service, and that category’s emergence, along with cloud computing generally (both easier to secure and with massive incentives to be secure), is the single biggest reason to be optimistic that WannaCry is the dying gasp of a bad business model (although it will take a very long time to get out of all the sunk costs and assumptions that fully-depreciated assets are “free”). In the long run, there is little reason for the typical enterprise or government to run any software locally, or store any files on individual devices. Everything should be located in a cloud, both files and apps, accessed through a browser that is continually updated, and paid for with a subscription. This puts the incentives in all the right places: users are paying for security and utility simultaneously, and vendors are motivated to earn it.

To Microsoft’s credit the company has been moving in this direction for a long time: not only is the company focused on Azure and Office 365 for growth, but even its traditional software has long been monetized through subscription-like offerings. Still, implicit in this cloud-centric model is a lot less lock-in and a lot more flexibility in terms of both devices and services: the reality is that as much of a headache as Windows security has been for Microsoft, those headaches are inextricably tied up with the reasons that Microsoft has been one of the most profitable companies of all time.

The big remaining challenge will be hardware: the business model for software-enabled devices will likely continue to be upfront payment, which means no incentives for security; the costs are externalities to be borne by the targets of botnets like Mirai. Expect little progress and lots of blame, the hallmark of the sort of systemic breakdown that results from a mismatched business model.
