The Facebook Brand

Last week Reuters reported on the Harris Brand Survey:

Apple Inc and Alphabet Inc’s Google corporate brands dropped in an annual survey while Amazon.com Inc maintained the top spot for the third consecutive year, and electric carmaker Tesla Inc rocketed higher after sending a red Roadster into space.

The headline of the piece was “Apple, Google, see reputation of corporate brands tumble in survey”; one would note that the editors at Reuters apparently disagree with the survey respondents about which brands move the needle. But I digress.

So why are Apple and Google lower?

John Gerzema, CEO of the Harris Poll, told Reuters in an interview that the likely reason Apple and Google fell was that they have not introduced as many attention-grabbing products as they did in past years, such as when Google rolled out free offerings like its Google Docs word processor or Google Maps and Apple’s then-CEO Steve Jobs introduced the iPod, iPhone and iPad.

Ah, no Google Docs updates. Got it!

I’m obviously snarking a bit, and it is worth noting that notoriety clearly plays a role in these survey results (look no further than spot 99, where the Harvey Weinstein company makes its debut in the list). What is indisputable, though, is that brand matters — and that includes the regulatory future for Google and Facebook.

YouTube and Wikipedia

Start with Google, specifically YouTube. From The Verge:

YouTube will add information from Wikipedia to videos about popular conspiracy theories to provide alternative viewpoints on controversial subjects, its CEO said today. YouTube CEO Susan Wojcicki said that these text boxes, which the company is calling “information cues,” would begin appearing on conspiracy-related videos within the next couple of weeks…

The information cues that Wojcicki demonstrated appeared directly below the video as a short block of text, with a link to Wikipedia for more information. Wikipedia — a crowdsourced encyclopedia written by volunteers — is an imperfect source of information, one which most college students are still forbidden from citing in their papers. But it generally provides a more neutral, empirical approach to understanding conspiracies than the more sensationalist videos that appear on YouTube.

Your average college student surely knows that the real trick is to use Wikipedia to find the sources that are actually allowed by college professors: they are helpfully linked at the bottom of every article. Indeed, Wikipedia’s citation policy arguably makes it one of the more reliable sources of information out there, at least in terms of conventional wisdom. Moreover, crowd-sourcing facts, at least in theory, seems like a more scalable solution to the sheer amount of video YouTube has to deal with.

It’s also a very Google-y solution: it makes sense that a company with the motto “Organize the world’s information and make it universally accessible and useful” would, confronted with questionable information, seek to remedy it with more information. Not bothering to tell Wikipedia fits as well; Google treats the web as its fiefdom, and for good reason. Search is built on links, the fabric of the web, and is the entry-point for nearly everyone, leading websites everywhere to do Google’s bidding; excluding oneself from search is like going on a hunger strike while fed by robots: one withers away and no one even notices. Google probably thinks Wikipedia should say “thank you”!

That noted, it’s hard to see this having any meaningful impact: conspiracy theories and fake news tend to appeal primarily to people who already want them to be true, so a Wikipedia link is unlikely to make a big difference. And, of course, there are the conspiracy theories that turn out to be true, or, perhaps more commonly, the conventional wisdom that proves to be wrong.

Facebook and Cambridge Analytica

So which is Cambridge Analytica and Facebook? A year ago the New York Times reported that Cambridge Analytica’s impact on the election of Donald Trump as president was overrated:

Cambridge Analytica’s rise has rattled some of President Trump’s critics and privacy advocates, who warn of a blizzard of high-tech, Facebook-optimized propaganda aimed at the American public, controlled by the people behind the alt-right hub Breitbart News. Cambridge is principally owned by the billionaire Robert Mercer, a Trump backer and investor in Breitbart. Stephen K. Bannon, the former Breitbart chairman who is Mr. Trump’s senior White House counselor, served until last summer as vice president of Cambridge’s board.

But a dozen Republican consultants and former Trump campaign aides, along with current and former Cambridge employees, say the company’s ability to exploit personality profiles — “our secret sauce,” Mr. Nix once called it — is exaggerated. Cambridge executives now concede that the company never used psychographics in the Trump campaign. The technology — prominently featured in the firm’s sales materials and in media reports that cast Cambridge as a master of the dark campaign arts — remains unproved, according to former employees and Republicans familiar with the firm’s work.

Over the weekend the New York Times was out with a new story, entitled “How Trump Consultants Exploited the Facebook Data of Millions”:

[Cambridge Analytica] harvested private information from the Facebook profiles of more than 50 million users without their permission, according to former Cambridge employees, associates and documents, making it one of the largest data leaks in the social network’s history. The breach allowed the company to exploit the private social media activity of a huge swath of the American electorate, developing techniques that underpinned its work on President Trump’s campaign in 2016.

Facebook executives — on Twitter, naturally — took exception to the use of the word “breach”:

This was unequivocally not a data breach. People chose to share their data with third party apps and if those third party apps did not follow the data agreements with us/users it is a violation. no systems were infiltrated, no passwords or information were stolen or hacked.

— Boz (@boztank) March 17, 2018

Everything was working as intended, thanks to the Graph API.

Facebook versus Google and the Graph API

Facebook introduced what it called the “Open Graph” back in 2010; CEO Mark Zuckerberg led off Facebook’s f8 developer conference thusly:

We think that what we have to show you today will be the most transformative thing we’ve ever done for the web. There are a few key themes that we are going to be talking about today. The first is the Open Graph that we’re all building together. Today, the web exists mostly as a series of unstructured links between pages, and this has been a powerful model, but it’s really just the start. The Open Graph puts people at the center of the web. It means the web can become a set of personally and semantically meaningful connections between people and things. I am FRIENDS with you. I am ATTENDING this event. I LIKE this band. These connections aren’t just happening on Facebook, they’re happening all over the web, and today, with the Open Graph, we’re going to bring all of these together.

The reference to “unstructured links” was clearly about Google, and while it’s easy to think of the two companies as a duopoly astride the web, Facebook was at the time a much smaller entity than it is today: 400 million users, still private, and a tiny advertising business relative to Google.

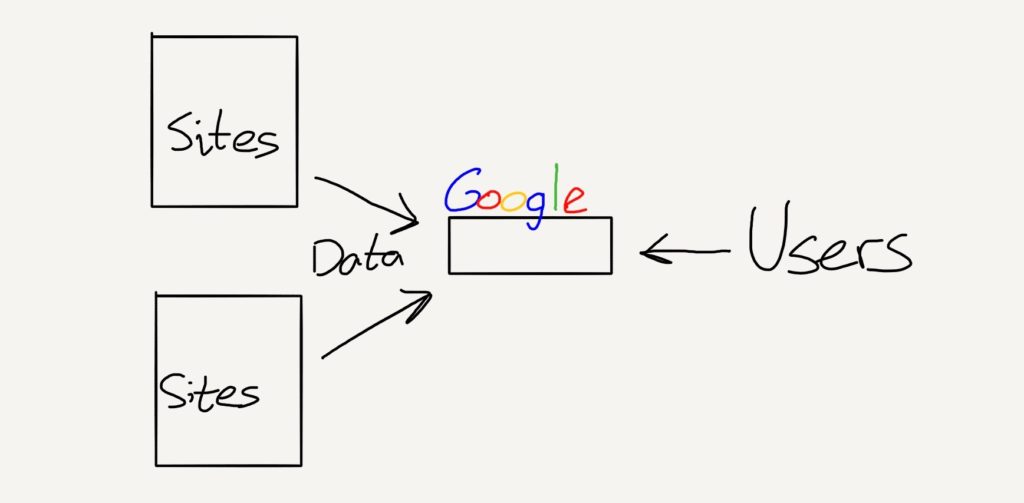

The challenge from Facebook’s perspective is the one I outlined above: Google got data from everywhere on the web because sites and applications were heavily incentivized to give it to Google so as to have a better chance of reaching end users aggregated by Google:

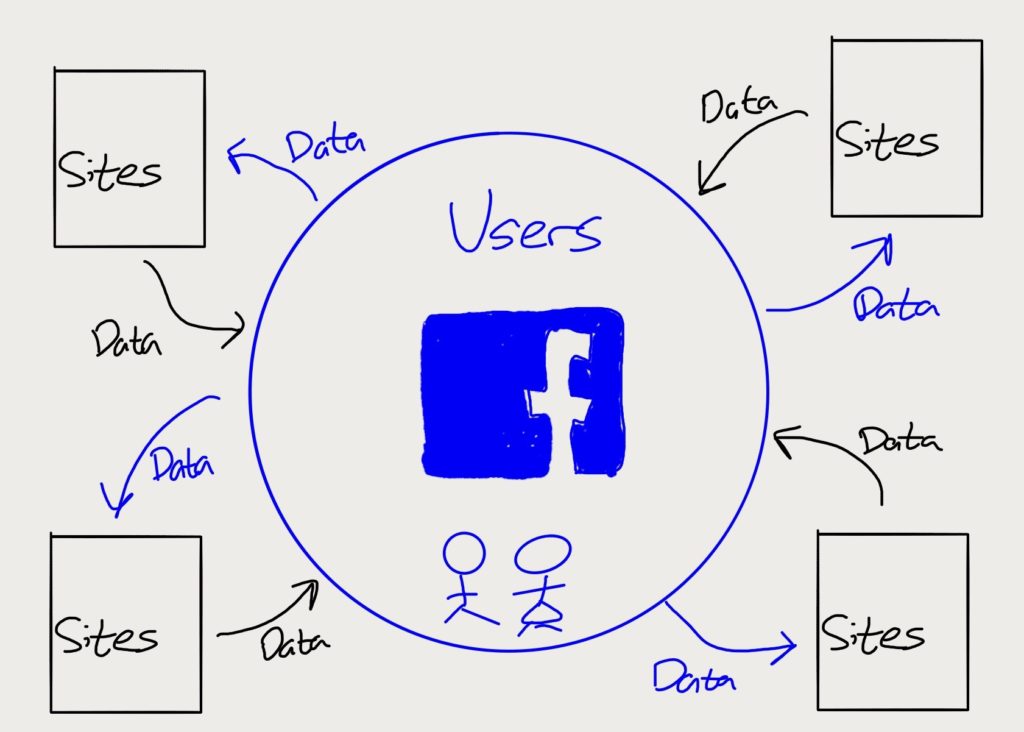

Facebook, meanwhile, was a closed garden. This was an advantage in that users generated Facebook’s content for them, and that said content wasn’t available to Google, but there was no obvious way for Facebook to gather data on the greater web, which is where the Open Graph came in; Facebook would give away slices of its data in exchange for data from sites and apps around the web.

Zuckerberg said as much in his keynote:

At our first F8, I introduced the concept of the Social Graph. The idea that if you mapped out all of the connections between people and things in the world it would form this massive interconnected graph that just shows how everyone is connected together. Now Facebook is actually only mapping out a part of this graph, mostly the part around people and the relationships that they have. You guys [developers] are mapping out other really important parts of the graph. For example, I know Yelp is here today. Yelp is mapping out the part of the graph that relates to small businesses. Pandora is mapping out the part of the graph that relates to music. And a lot of news sites are mapping out the part of the graph that relates to current events and news content. If we can take these separate maps of the graph and pull them all together, then we can create a web that is more social, personalized, smarter, and semantically aware. That’s what we’re going to focus on today.

What followed was the introduction of the Graph API, which was the means by which Facebook would facilitate the data exchange, and as you can see on an old Facebook developer page, Facebook was willing to give away just about everything.

Moreover, note that users could give away everything about their friends as well; this is exactly how the researcher implicated in the Cambridge Analytica story leveraged 270,000 survey respondents to gain access to the data of 50 million Facebook users.
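To make that fan-out concrete, here is a minimal sketch in Python. It is a toy model, not the Graph API: the five-person graph and the `reachable_profiles` function are hypothetical, and only the 270,000 and 50 million figures come from the reporting above.

```python
# Toy model of friend-permission fan-out. This is an illustrative sketch,
# not the Graph API: the graph, names, and function are hypothetical.

def reachable_profiles(friends, consenting):
    """Return every profile an app can read when each consenting user
    also exposes their friends' profiles."""
    reachable = set()
    for user in consenting:
        reachable.add(user)                      # the user who authorized the app
        reachable.update(friends.get(user, ()))  # friends who never opted in
    return reachable


# A five-person network; only "ann" takes the survey.
friends = {
    "ann": {"bob", "cat", "dan"},
    "bob": {"ann", "eve"},
    "cat": {"ann"},
    "dan": {"ann"},
    "eve": {"bob"},
}
exposed = reachable_profiles(friends, consenting={"ann"})
print(sorted(exposed))  # ['ann', 'bob', 'cat', 'dan']

# The reported figures imply roughly this many profiles per respondent.
respondents = 270_000
profiles = 50_000_000
print(round(profiles / respondents))  # 185
```

One respondent exposes four profiles in the toy graph; at the reported scale, each survey respondent corresponds to roughly 185 reachable profiles.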

Facebook finally shut down the friend-sharing functionality five years later, after it was clearly ensconced with Google atop the digital advertising world, of course.

Facebook’s Brand

That Facebook pursued such a strategy is even less of a surprise than Google’s imperious adoption of Wikipedia as conspiracy theory debunker: Facebook’s motto was “Making the world more open and connected”, and the company has repeatedly demonstrated a willingness to do just that, whether users like it or not. That’s the thing with branding: what people think about your company is not so much what you say but what you do, and that many people immediately assume the worst about Facebook and privacy is Facebook’s own fault.

To be sure, there seems to be a partisan angle as well: one didn’t see many complaints about the Obama campaign. From the Washington Post:

Early in 2011, some Obama operatives visited Facebook, where executives were encouraging them to spend some of the campaign’s advertising money with the company. “We started saying, ‘Okay, that’s nice if we just advertise,’ ” Messina said. “But what if we could build a piece of software that tracked all this and allowed you to match your friends on Facebook with our lists, and we said to you, ‘Okay, so-and-so is a friend of yours, we think he’s unregistered, why don’t you go get him to register?’ Or ‘So-and-so is a friend of yours, we think he’s undecided. Why don’t you get him to be decided?’ And we only gave you a discrete number of friends. That turned out to be millions of dollars and a year of our lives. It was incredibly complex to do.”

But this third piece of the puzzle provided the campaign with another treasure trove of information and an organizing tool unlike anything available in the past. It took months and months to solve, but it was a huge breakthrough. If a person signed on to Dashboard through his or her Facebook account, the campaign could, with permission, gain access to that person’s Facebook friends. The Obama team called this “targeted sharing.” It knew from other research that people who pay less attention to politics are more likely to listen to a message from a friend than from someone in the campaign. The team could supply people with information about their friends based on data it had independently gathered. The campaign knew who was and who wasn’t registered to vote. It knew who had a low propensity to vote. It knew who was solid for Obama and who needed more persuasion — and a gentle or not-so-gentle nudge to vote. Instead of asking someone to send a message to all of his or her Facebook friends, the campaign could present a handpicked list of the three or four or five people it believed would most benefit from personal encouragement.

This, though, is hardly a defense for Facebook: what is the company going to say, that it was exporting friend data for everyone, not just Trump? To be sure, buying the data from an academic and allegedly holding onto it violated Facebook’s Terms of Service, but “We have terms of service!” isn’t exactly a powerful branding campaign, especially given that at that same 2010 f8 Facebook had dramatically loosened those terms of service:

We’ve had this policy where you can’t store or cache data for any longer than 24 hours, and we’re going to go ahead and get rid of that policy.

(Cheering)

So now, if a person comes to your site, and a person gives you permission to access their information, you can store it. No more having to make the same API calls day-after-day. No more needing to build different code paths just to handle information that Facebook users are sharing with you. We think that this step is going to make building with Facebook platform a lot simpler.

Indeed it was.
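The practical effect of that change can be sketched in a few lines of Python. This is a hypothetical model, not Facebook’s platform code: `ProfileStore`, `max_age`, and the timestamps are invented for illustration, with `max_age=DAY` standing in for the old 24-hour rule and `max_age=None` for the policy that replaced it.

```python
# Hypothetical sketch of the policy change; not Facebook's platform code.
DAY = 24 * 60 * 60  # the old 24-hour limit, in seconds


class ProfileStore:
    """Stores fetched profiles; entries expire after max_age seconds,
    or never expire when max_age is None."""

    def __init__(self, max_age=None):
        self.max_age = max_age
        self._data = {}  # user_id -> (fetched_at, profile)

    def put(self, user_id, profile, now):
        self._data[user_id] = (now, profile)

    def get(self, user_id, now):
        entry = self._data.get(user_id)
        if entry is None:
            return None
        fetched_at, profile = entry
        if self.max_age is not None and now - fetched_at > self.max_age:
            del self._data[user_id]  # stale: the app must call the API again
            return None
        return profile


old_rules = ProfileStore(max_age=DAY)
new_rules = ProfileStore(max_age=None)
for store in (old_rules, new_rules):
    store.put("ann", {"likes": ["band"]}, now=0)

a_year_later = 365 * DAY
print(old_rules.get("ann", now=a_year_later))  # None
print(new_rules.get("ann", now=a_year_later))  # {'likes': ['band']}
```

In this toy model, nothing ever forces the second store to refetch or discard what it holds.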

Google, Facebook, and Regulation

Ultimately, the difference in Google and Facebook’s approaches to the web (and, in the case of the latter, to user data) suggests how the duopolists will ultimately be regulated. Google is already facing significant antitrust challenges in the E.U., which is exactly what you would expect from a company in a dominant position in a value chain able to dictate terms to its suppliers. Facebook, meanwhile, has always seemed more immune to antitrust enforcement: its users are its suppliers, so what is there to regulate?

That, though, is the answer: user data. It seems far more likely that Facebook will be directly regulated than Google; arguably this is already the case in Europe with the GDPR. What is worth noting, though, is that regulations like the GDPR entrench incumbents: protecting users from Facebook will, in all likelihood, lock in Facebook’s competitive position.

This episode is a perfect example. An unintended casualty of this weekend’s firestorm is the idea of data portability: I have argued that social networks like Facebook should make it trivial to export your network, but it seems far more likely that most social networks will respond to the Cambridge Analytica scandal by locking down data even further. That may be good for privacy, but it’s not so good for competition. Everything is a trade-off.

I wrote a follow-up to this article in this Daily Update.
