Apple’s Shifting Differentiation

If you ask Apple — or watch their seemingly never-ending series of events — they will happily tell you exactly what the company’s differentiation is based on; from this year alone:

This integration is at the core of Apple’s incredibly successful business model: the company makes the majority of its money by selling hardware, but while other manufacturers can, at least in theory, create similar hardware, which should lead to commoditization, only Apple’s hardware runs its proprietary operating systems.

Of course software is even more commoditizable than hardware: once written, software can be duplicated endlessly, which means its marginal cost of production is zero. This is why many software-based companies are focused on serving as large of a market as possible, the better to leverage their investments in creating the software in the first place. However, zero marginal cost is not the only inherent quality of software: it is also infinitely customizable, which means that Apple can create something truly unique, and by tying said software to its hardware, make its hardware equally unique as well, allowing it to charge a sustainable premium.

This is, to be sure, a simplistic view of Apple: many aspects of its software are commoditized, often to Apple’s benefit, while many aspects of its hardware are differentiated. What is fascinating is that while modern Apple is indeed characterized by the integration of hardware and software, the balance of which differentiates the other has shifted over time, culminating in yesterday’s announcement of new Macs powered by Apple Silicon.

Apple 1.0: Software Over Hardware

When Steve Jobs returned to Apple in 1996, the company was famously in terrible financial shape; unsurprisingly the company’s computer lineup was in terrible shape as well: too many models that were too unremarkable. The only difference from PCs was that Macs had a different operating system that was technically obsolete, PowerPC processors that were falling behind x86, and also they were more expensive. Not exactly a winning combination!

Jobs made a number of changes in short order: he killed off the Macintosh clone market, re-asserting Apple’s integrated business model; he dramatically simplified the product lineup; and, having found a promising young designer already working at Apple named Jony Ive, he put all of the company’s efforts behind the iMac. This was truly a product where the hardware carried the software; the iMac was a cultural phenomenon, not because of Classic Mac OS’s ease-of-use, and certainly not because of its lack of memory protection, but simply because the hardware was so simple and so adorable.

OS X brought software to the forefront, delivering not simply a technically sound operating system, but one that was based on Unix, making it particularly attractive to developers. And, on the consumer side, Apple released iLife, a suite of applications that made a Mac useful for normal users. I myself bought my first Mac in this era because I wanted to use GarageBand; 16 years on and my musical ambitions are abandoned, but my Mac usage remains.

By that point I was buying a Mac despite its hardware: while my iBook was attractive enough, its processor was a Motorola G4 that was not remotely competitive with Intel’s x86 processors; later that year Jobs made the then-shocking-but-in-retrospect-obvious decision to shift Macs to Intel processors. In this case having the same hardware as everyone else in the industry would be a big win for Apple, the better to let their burgeoning software differentiation shine.

Apple 2.0: The Apex of Integration

Meanwhile, Apple had an exploding hit on its hands with the iPod, which combined beautiful hardware and superior storage capacity with iTunes, software that offloaded the complexity of managing your music to your far more capable Mac and, starting in 2003, your PC; notably Apple avoided the trap of integrating hardware (the iPod) with hardware (the Mac), which would have handicapped the former to prop up the latter. Instead the company took advantage of the flexibility of software to port iTunes to Windows.

The iPhone followed the path blazed by the iPod: while the first few versions of the iPhone were remarkably limited in their user-facing software capabilities, that was acceptable because much of that complexity was offloaded to the PC or Mac you plugged it into. To that point much of the software work had gone into making the iPhone usable on hardware that was barely good enough; RIM famously thought Jobs was lying about the iPhone’s capabilities at launch.

Over time the iPhone would gradually wean itself off of iTunes and the need to sync with a PC or Mac, making itself a standalone computer in its own right; it was also on its way to being the most valuable product in history. This was the ultimate in integration, both in terms of how the product functioned, and also in the business model that integration unlocked.

Apple 3.0: Hardware Over Software

Sixteen years on from the PowerPC-to-Intel transition, and Apple’s software differentiation is the smallest it has been since the dawn of OS X. Windows has a Subsystem for Linux, which, combined with Microsoft’s laser focus on developers, makes its products increasingly attractive for software development. Meanwhile, most customers use web apps on their computers, PC or Mac. There has been an explosion in creativity, but that explosion has occurred on smartphones, and is centered around distribution channels, not one’s personal photo or movie library.

Those distribution channels and the various apps customers use to create and consume are available on both leading platforms, iOS and Android. I personally feel that the iPhone retains an advantage in the smoothness of its interface and quality of its apps, but Android is more flexible and well-suited to power users, and much better integrated with Google’s superior web services; there are strong arguments to be made for both ecosystems.

Where the iPhone is truly differentiated is in hardware: Apple has — for now — the best camera system, and has had for years the best system-on-a-chip. These two differentiators are related: smartphone cameras are not simply about lenses and sensors, but also about how the resultant image is processed; that involves both software and the processor, and what is notable about smartphone cameras is that Google’s photo-processing software is generally thought to be superior. What makes the iPhone a better camera, though, is its chip.

Apple Silicon and Sketch

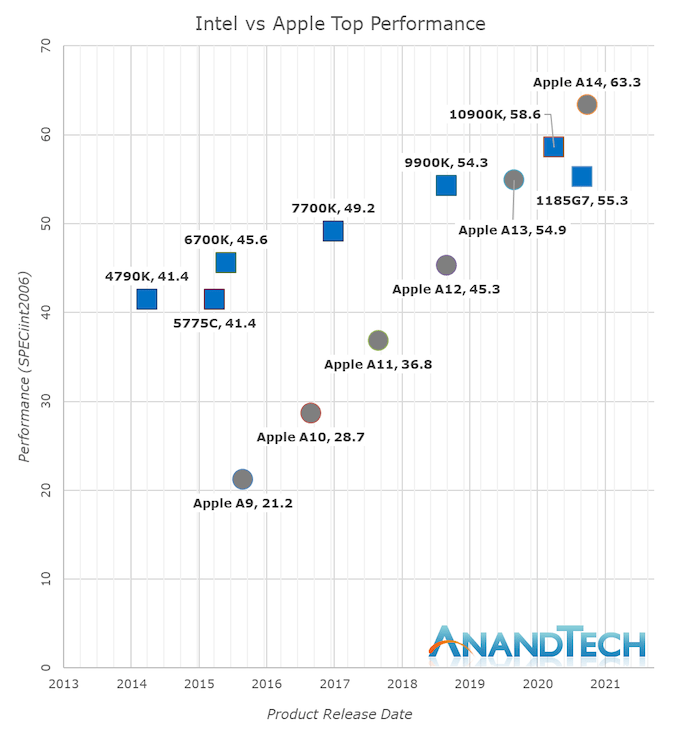

It is difficult to overstate just how far ahead Apple’s A-series of smartphone chips is relative to the competition; AnandTech found that the A14 delivered nearly double the performance of its closest competitors for the same amount of power — indeed, the A14’s only true competitor was last year’s A13. At least, that is, as far as mobile is concerned; the most noteworthy graph from that AnandTech article is about how the A14 stacks up against those same Intel chips that power Macs:

Whilst in the past 5 years Intel has managed to increase their best single-thread performance by about 28%, Apple has managed to improve their designs by 198%, or 2.98x (let’s call it 3x) the performance of the Apple A9 of late 2015.

Apple’s performance trajectory and unquestioned execution over these years is what has made Apple Silicon a reality today. Anybody looking at the absurdness of that graph will realise that there simply was no other choice but for Apple to ditch Intel and x86 in favour of their own in-house microarchitecture – staying par for the course would have meant stagnation and worse consumer products.

Today’s announcements only covered Apple’s laptop-class Apple Silicon, whilst we don’t know the details at time of writing as to what Apple will be presenting, Apple’s enormous power efficiency advantage means that the new chip will be able to offer either vastly increased battery life, and/or, vastly increased performance, compared to the current Intel MacBook line-up.
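The percentages in the AnandTech passage quoted above are easy to misread, so here is a minimal sketch of the arithmetic: a gain of N% corresponds to a multiplier of 1 + N/100. The function and variable names are my own, chosen for illustration; only the 28% and 198% figures come from the quote.

```python
def improvement_to_multiplier(percent_gain: float) -> float:
    """Convert a percentage improvement into a performance multiplier."""
    return 1.0 + percent_gain / 100.0


# Five-year single-thread gains as quoted from AnandTech.
intel = improvement_to_multiplier(28)
apple = improvement_to_multiplier(198)

# Ratio of the two improvement multipliers over the same period.
relative_gain = apple / intel

print(f"Intel: {intel:.2f}x, Apple: {apple:.2f}x, relative: {relative_gain:.2f}x")
# prints "Intel: 1.28x, Apple: 2.98x, relative: 2.33x"
```

Note that the last number compares rates of improvement, each measured against its own 2015 starting point; by itself it says nothing about which chip is faster in absolute terms.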

What makes the timing of this move ideal from Apple’s perspective is not simply that this is the year that the A-series of chips are surpassing Intel’s, but also the Mac’s slipping software differentiation. Sketch, makers of the eponymous vector graphics app, wrote, on the occasion of their 10th anniversary, a paean to Mac apps:

Ten years after the first release of Sketch, a lot has changed. The design tools space has grown. Our amazing community has, too. Even macOS itself has evolved. But one thing has remained the same: our love for developing a truly native Mac app. Native apps bring so many benefits — from personalization and performance to familiarity and flexibility. And while we’re always working hard to make Cloud an amazing space to collaborate, we still believe the Mac is the perfect place to let your ideas and imagination flourish.

The fly in Sketch’s celebratory ointment is that phrase “even macOS itself has evolved”; the truth is that most of the macOS changes over Sketch’s lifetime — which started with Snow Leopard, regarded by many (including yours truly) as the best version of OS X — have been at best cosmetic, at worst clumsy attempts to protect novice users that often got in the way of power users.

Meanwhile, it is the cloud that is the real problem facing Sketch: Figma, which is built from the ground up as a collaborative web app, is taking the design world by storm, because rock-solid collaboration with good-enough web apps is more important for teams than tacked-on collaboration with native software built for the platform.

Sketch, to be sure, bears the most responsibility for its struggles; frankly, that native app piece reads like a refusal to face its fate. Apple, though, shares a lot of the blame: imagine if instead of effectively forcing Sketch out of the App Store with its zealous approach to security, Apple had evolved AppKit, macOS’s framework for building applications, to provide built-in support for collaboration and live-editing.

Instead the future is web apps, with all of the performance hurdles they entail, which is why, from Apple’s perspective, the A-series is arriving just in time. Figma in Electron may destroy your battery, but that destruction will take twice as long, if not more, with an A-series chip inside!

Integration Wins Again

This isn’t the first time I have noted that Apple is inclined to fix an ecosystem problem with hardware; five years ago, after the launch of the iPad Pro, I wrote in From Products to Platforms:

Note that phrase: “How could we take the iPad even further?” Cook’s assumption is that the iPad problem is Apple’s problem, and given that Apple is a company that makes hardware products, Cook’s solution is, well, a new product.

My contention, though, is that when it comes to the iPad Apple’s product development hammer is not enough. Cook described the iPad as “A simple multi-touch piece of glass that instantly transforms into virtually anything that you want it to be”; the transformation of glass is what happens when you open an app. One moment your iPad is a music studio, the next a canvas, the next a spreadsheet, the next a game. The vast majority of these apps, though, are made by 3rd-party developers, which means, by extension, 3rd-party developers are even more important to the success of the iPad than Apple is: Apple provides the glass, developers provide the experience.

The iPad has since recovered from its 2017 nadir in sales, but seems locked in at around 8% of Apple’s revenue, a far cry from the 20% share it had in its first year, when it looked set to rival the iPhone; I remain convinced that the lack of a thriving productivity software market that treated the iPad like the unique device Jobs thought it was, instead of a laptop replacement, is the biggest reason why.

Perhaps Apple Silicon in Macs will turn out better: it is possible that Apple’s chip team is so far ahead of the competition, not just in 2020, but particularly as it develops even more powerful versions of Apple Silicon, that the commoditization of software inherent in web apps will work to Apple’s favor, just as its move to Intel commoditized hardware, highlighting Apple’s then-software advantage in the 00s.

Apple is pricing these new Macs as if that is the case: the M1 probably costs around $75 (an educated guess), which is less than the Intel chips it replaces, but Apple is mostly holding the line on prices (the new Mac Mini is $100 cheaper, but also has significantly less I/O). That suggests the company believes it can both take share and margin, and it’s a reasonable bet from my perspective. The company has the best chips in the world, and you have to buy the entire integrated widget to get them.
