Moderation in Infrastructure


It was Patrick Collison, Stripe’s CEO, who pointed out to me that one of the animating principles of early 20th-century Progressivism was guaranteeing freedom of expression from corporations:

If you go back to 1900, part of the fear of the Progressive Era was that privately owned infrastructure wouldn’t be sufficiently neutral and that this could pose problems for society. These fears led to lots of common carrier and public utilities law covering various industries — railways, the telegraph, etc. In a speech given 99 years ago next week, Bertrand Russell said that various economic impediments to the exercise of free speech were a bigger obstacle than legal penalties.

Russell’s speech, entitled Free Thought and Official Propaganda, acknowledges “the most obvious” point, that laws against certain opinions are an imposition on freedom, but says of the power of big companies:

Exactly the same kind of restraints upon freedom of thought are bound to occur in every country where economic organization has been carried to the point of practical monopoly. Therefore the safeguarding of liberty in the world which is growing up is far more difficult than it was in the nineteenth century, when free competition was still a reality. Whoever cares about the freedom of the mind must face this situation fully and frankly, realizing the inapplicability of methods which answered well enough while industrialism was in its infancy.

It’s fascinating to look back on this speech now. On one hand, the sort of beliefs that Russell was standing up for — “dissents from Christianity, or belie[f]s in a relaxation of marriage laws, or object[ion]s to the power of great corporations” — are freely shared online and elsewhere; if anything, those who hold the views Russell objected to are more likely today to insist on their oppression by the powers that be. On the other hand, those powers, at least in terms of social media, feel more centralized than ever.

This power came to the fore in early January 2021, when first Facebook, and then Twitter, suspended or banned the sitting President of the United States from their respective platforms. It was a decision I argued for; from Trump and Twitter:

My highest priority, even beyond respect for democracy, is the inviolability of liberalism, because it is the foundation of said democracy. That includes the right for private individuals and companies to think and act for themselves, particularly when they believe they have a moral responsibility to do so, and the belief that no one else will. Yes, respecting democracy is a reason to not act over policy disagreements, no matter how horrible those policies may be, but preserving democracy is, by definition, even higher on the priority stack.

This is, to be sure, a very American sort of priority stack; political leaders across the world objected to Twitter’s actions, not because they were opposed to moderation, but because it was unaccountable tech executives making the decision instead of government officials:

Merkel sees the #Twitter ban of Donald Trump as "problematic". Freedom of speech can be restricted only by the legislator, not by the management [of] a private company.

— Guy Chazan (@GuyChazan) January 11, 2021


I do suspect that tech company actions will have international repercussions for years to come, but for the record, there is reason to be concerned from an American perspective as well: you can argue, as I did, that Facebook and Twitter have the right to police their platforms, and, given their viral nature, even an obligation to do so. The balance to that power, though, should be the openness of the Internet, which means the infrastructure companies that undergird the Internet have very different responsibilities and obligations.

A Framework for Moderation

I have made the case in A Framework for Moderation that moderation decisions should be based on where you are in the stack; with regard to Facebook and Twitter:

At the top of the stack are the service providers that people publish to directly; this includes Facebook, YouTube, Reddit, and other social networks. These platforms have absolute discretion in their moderation policies, and rightly so. First, because of Section 230, they can moderate anything they want. Second, none of these platforms have a monopoly on online expression; someone who is banned from Facebook can publish on Twitter, or set up their own website. Third, these platforms, particularly those with algorithmic timelines or recommendation engines, have an obligation to moderate more aggressively because they are not simply distributors but also amplifiers.

This is where much of the debate on moderation has centered; it is also not what this Article is about:

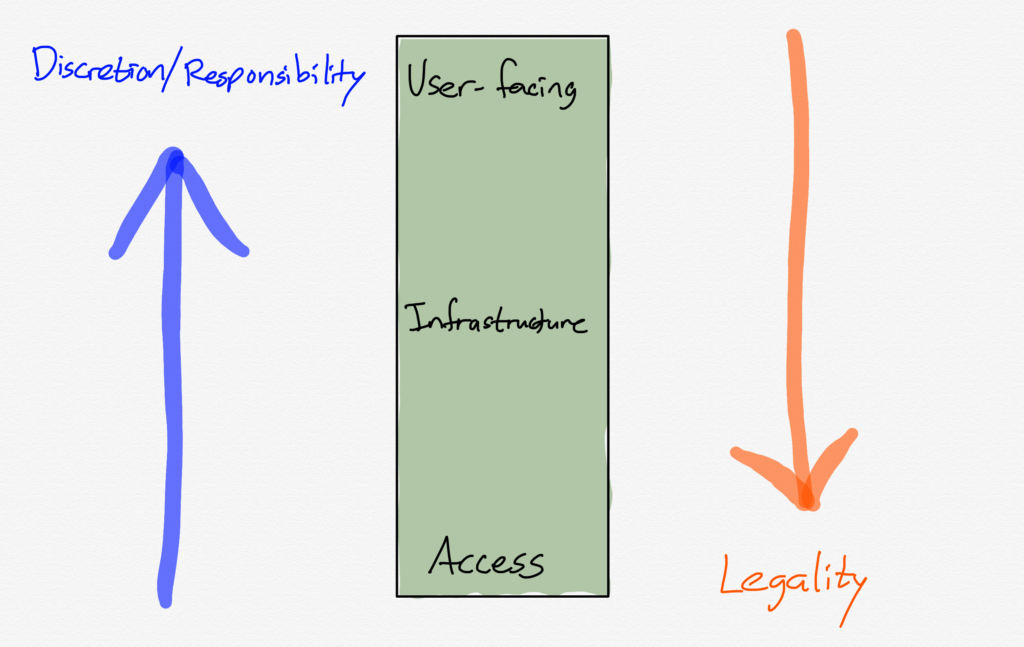

It makes sense to think about these positions in the stack very differently: the top of the stack is about broadcasting — reaching as many people as possible — and while you may have the right to say anything you want, there is no right to be heard. Internet service providers, though, are about access — having the opportunity to speak or hear in the first place. In other words, the further down the stack, the more legality should be the sole criterion for moderation; the further up, the more discretion and even responsibility there should be for content:

Note the implications for Facebook and YouTube in particular: their moderation decisions should not be viewed in the context of free speech, but rather as discretionary decisions made by managers seeking to attract the broadest customer base; the appropriate regulatory response, if one is appropriate, should be to push for more competition so that those dissatisfied with Facebook or Google’s moderation policies can go elsewhere.

The problem is that I skipped the part between broadcasting and access; today’s Article is about the big piece in the middle: infrastructure. As that simple illustration suggests, there is more room for action here than at the access layer, but more reticence is in order relative to broadcast platforms. To figure out how infrastructure companies should think about moderation, I talked to four executives at various layers of infrastructure:

- Patrick Collison, the CEO of Stripe, which provides payment services to both individual companies and to platforms.

- Brad Smith, the President of Microsoft, which owns and operates Azure, on which a host of websites, apps, and services are run.

- Thomas Kurian, the CEO of Google Cloud, on which a host of websites, apps, and services are run.

- Matthew Prince, the CEO of Cloudflare, which offers networking services, including free self-serve DDoS protection, without which many websites, apps, and services, particularly those not on the big public clouds, could not effectively operate.

What I found compelling about these interviews was the commonality in responses; to that end, instead of my making pronouncements on how infrastructure companies should think about issues of moderation, I thought it might be helpful to let these executives make their own case.

The Line in the Stack

The first overarching principle was very much in line with the argument I laid out above: infrastructure is very different from user-facing applications, and should be approached differently.

Microsoft’s Brad Smith:

The industry is looking at the stack and almost putting it in two layers. At the top half of the stack are services that basically tend to meet three criteria or close to it. One is it is a service that has control over the postings or removal of individual pieces of content. The second is that the service is publicly facing, and the third is that the content itself has a greater proclivity to go viral. Especially when all three or even when say two of those three criteria are met, I think that there is an expectation, especially in certain content categories like violent extremism or child exploitative images and the like that the service itself has a responsibility to be reviewing individual postings in some appropriate way.

Cloudflare’s Matthew Prince:

I think that so long as each of the different layers above you are complying with law and doing the right thing and cooperating with law enforcement…it should be an extremely high bar for somebody that sits as low in the stack as we do…I think that that’s different than if you’re Facebook firing an individual user, or even if you’re a hosting provider firing an individual platform. The different layers of the stack, I think do have different levels of responsibility and that’s not to say we don’t have any responsibility, it’s just that we have to be very thoughtful and careful about it, I think more so than what you have to do as you move up further in the stack.

Stripe’s Patrick Collison:

We’re different from others. We’re financial services infrastructure, not a content platform. I’m not sure that the kind of neutrality that companies like Stripe should uphold is necessarily best for Twitter, YouTube, Facebook, etc. However, I do think that in some of the collective reckoning with the effects of social media, the debate sometimes underrates the importance of neutrality at the infrastructure level.

Your Layer = Your Responsibility

The idea of trusting and empowering platforms built on infrastructure to take care of their layer of the stack was a universal one. Collison made the case that trying to police the entire stack was unworkable in a free society (and that this explained why Stripe kicked the Trump campaign off after January 6th, but did not cut off fundraising for the campaign conducted through other platforms built on Stripe):

This gets into platform governance, which is one of the most important dimensions of all of this, I think. We suspended the campaign accounts that directly used Stripe — the accounts where we’re the top-of-the-stack infrastructure. We didn’t suspend all fundraising conducted by other platforms that benefitted his campaign. We expect platforms that are built on Stripe to implement their own moderation and governance policies and we think that they should have the latitude to do so. This idea of paying attention to your position in the stack is obviously something you’ve written about before and I think things just have to work this way. Otherwise, we’re ultimately all at the mercy of the content policies of our DNS providers, or something like that.

Google’s Thomas Kurian noted there were practical considerations as well:

Imagine somebody wrote a multi-tenant software as a service application on top of GCP, and they’re offering it, and one of the tenants in that application is doing something that violates the ToS but others are not. It wouldn’t be appropriate or even legally possible for us to shut off the entire SaaS application because you can’t just say I’m going to throttle the IP addresses or the inbound traffic, because there’s no way that the tenant is below that level of granularity. In some cases it’s not even technically feasible, and so rather than do that, our model is to tell the customer, who we have a direct relationship with, “Hey, you signed an agreement that agreed to comply with our ToS and AUP [Acceptable Use Policy], but now we have a report of a potential violation of that and it’s your responsibility to pass those obligations on to your end customer.”

Still, Smith didn’t completely absolve infrastructure companies of responsibility:

The platform underneath doesn’t tend to meet those three criteria, but that doesn’t absolve the platform of all responsibility to be thinking about these issues. The test for, at the platform level, is therefore whether the service as a whole has a reasonable infrastructure and is making reasonable efforts to fulfill its responsibilities with respect to individualized content issues. So whether you’re a GCP or AWS or Azure or some other service, what people increasingly expect you to do is not make one-off decisions on one-off postings, but asking whether the service has a level of content moderation in place to be responsible. And if you’re a gab.ai and you say to Azure, “We don’t and we won’t,” then as Azure, we would say, “Well look, then we are not really comfortable as being the hosting service for you.”

Proactive Process

This middle way — give responsibility to the companies and services on top of your infrastructure, but if they fail, have a predictable process in place to move them off of the platform — requires a proactive approach. Smith again:

Typically what we try to do is identify these issues early on. If we don’t think there’s a natural match, if we’re not comfortable with somebody, it makes more sense to let them know before they get on our service so that they can know that and they can find their own means of production if that’s what they want. If we conclude that somebody is reliant on us for their means of production, and we’re no longer comfortable with them, we should try to manage it through a conversation so they can find a means of production that is an alternative if that’s what they choose. But ideally, you don’t want to call them up at noon and tell them they have two hours before they’re no longer on the internet.

Kurian made the same argument against arbitrary decision-making and in favor of proactive process:

We evolve our Acceptable Use Policies on a periodic basis. Remember, we need to evolve it in a thoughtful way, not react to individual crisis. Secondly, we also need to evolve it in a way with a certain frequency, otherwise customers stop trusting the platform. They’d be like “Today, I thought I was accepted and tomorrow you changed it, and now I’m no longer accepted and I have to migrate off the platform”. So we have a fairly well thought out cadence, typically once every six months to once every twelve months, when we reevaluate that based on what we see…

We try to be as prescriptive as possible so that people have as much clarity as possible with what can they do and what they can’t do. Secondly, when we run into something that is a new circumstance, because the boundary of these things continue to move, if it’s a violation of what is considered a legally acceptable standard, we will take action much more quickly. If it’s not a direct violation of law but more debatable on should you take action or not, as an infrastructure provider, our default is don’t take action, but we will then work through a process to update our AUP if we think it’s a violation, and then we make that available through that.

What About Parler?

This makes sense as I write this on March 16, 2021, while the world is relatively calm; the challenge is holding to a commitment to this default when the heat is on, like it was in January. Prince noted:

We are a company that operates, we have equipment running in over 100 countries around the world, we have to comply with the laws in all those countries, but more than that, we have to comply with the norms in all of those countries. What I’ve tried to figure out is, what’s the touchstone that gets us away from freedom of expression and gets us to something which is more universal and more fundamental around the world and I keep coming back to what in the US we call due process, but around the rest of the world, they’d call it, rule of law. I think the interesting thing about the events of January were you had all these tech companies start controlling who is online and it was actually Europe that came out and said, “Whoa, Whoa, Whoa. That makes us super uncomfortable.” I’m kind of with Europe.

Collison made a similar argument about AWS’s decision to stop serving Parler:

Parler was a good case study. We didn’t revoke Parler’s access to Stripe. They’re a platform themselves and it certainly wasn’t clear to us in the moment that Parler should be held responsible for the events. (I’m not making a final assessment of their culpability — just saying that it was impossible for anyone to know immediately.) I don’t want to second guess anyone else’s decisions — we’re doing this interview because these questions are hard! — but I think it’s very important that infrastructure players are willing to delegate some degree of moderation authority to others higher in the stack. If you don’t, you get these problematic choke points…These sudden, unpredictable flocking events can create very intense pressure and I think responding in the moment is usually not a recipe for good decision making.

I was unable to speak with anyone from AWS; the company said in a court filing that, beginning in November 2020, it had repeatedly warned Parler that it needed more stringent moderation. AWS said in its filing:

This case is not about suppressing speech or stifling viewpoints. It is not about a conspiracy to restrain trade. Instead, this case is about Parler’s demonstrated unwillingness and inability to remove from the servers of Amazon Web Services (“AWS”) content that threatens the public safety, such as by inciting and planning the rape, torture, and assassination of named public officials and private citizens. There is no legal basis in AWS’s customer agreements or otherwise to compel AWS to host content of this nature. AWS notified Parler repeatedly that its content violated the parties’ agreement, requested removal, and reviewed Parler’s plan to address the problem, only to determine that Parler was both unwilling and unable to do so. AWS suspended Parler’s account as a last resort to prevent further access to such content, including plans for violence to disrupt the impending Presidential transition.

This echoes the argument that Prince made in 2019 in the context of Cloudflare’s decision to remove its (free) DDoS protection service from 8chan. Prince explained:

The ultimate responsibility for any piece of user-generated content that’s placed online is the user that generated it and then maybe the platform, and then maybe the host and then, eventually you get down to the network, which is where we are. What I think changed was, there were certain cases where there were certain platforms that were designed from the very beginning, to thwart all legal responsibility, all types of editorial. And so the individuals were bad people, their platforms themselves were bad people, the hosts were bad people. And when I say bad people, what I mean is, people who just ignore the rule of law…[So] every once in a while, there might be something that is so egregious and literally designed to be lawless, that it might fall on us.

In other words, infrastructure companies should defer to the services above them, but if no one else will act, they might have no choice; still, Prince added:

What was interesting was as we saw all of these other platforms struggle with this and I think Apple, AWS, and Google got a lot of attention, and it was interesting to see that same framework that we had set out, being put out. I’m not sure it’s exactly the same. Was Parler all the way to complete lawlessness, or were they just over their skis in terms of content moderation?

This is where I go back to Smith’s arguments about proactive engagement, and Kurian’s focus on process: if AWS felt Parler was unacceptable, it should have moved sooner, like Microsoft appears to have done with Gab several years ago. The seeming arbitrariness of the decision was directly related to the lack of proactive management on AWS’s part.

U.S. Corporate Responsibility

An argument I made about the actions of tech companies after January 6 is that they are best understood as part of America’s system of checks and balances; corporations have wide latitude in the U.S. — see the First Amendment, for example, or the ability to kick the President off of their platform — but, as the Spider-Man line goes, “With great power comes great responsibility”. In this view, U.S. tech companies were doing their civic duty in ensuring an orderly transfer of power, even though it hurt their self-interest. I put this argument to Collison:

I think you’re getting at the idea that companies do have some kind of ultimate responsibility to society, and that that might occasionally lead to quite surprising actions, or even to actions that are inconsistent with their stated policies. I agree. It’s important to preserve the freedom of voluntary groups of private citizens to occasionally act as a check on even legitimate power. If some other company decided that the events of 1/6 simply crossed a subjective threshold and that they were going to withdraw service as a result, well, I think that’s an important right for them to hold, and I think that the aggregate effect of such determinations, prudently and independently applied, will ultimately be a more robust civic society.

Prince was more skeptical:

That is a very charitable read of what went on…I think, the great heroic patriotic, “We did what was right for the country”, I mean, I would love that to be true, I’m not sure that if you actually dig into the reality of that, that it was that. As opposed to succumbing to the pressure of external, but more importantly, internal pressures.

Smith took the question in a different direction, arguing that tech company actions in the U.S. are increasingly guided by expectations from other countries:

I do think that there is, as embedded in the American business community, a sense of corporate social responsibility and I think that has grown over the last fifteen years. It’s not unique to the United States because I could argue that in certain areas, European business feels the same way. I would say that there are two factors that add to that sense of American corporate responsibility as it applies to technology and content moderation that are also important. One is, during the Trump administration, there was a heightened expectation by both tech employees and civil society in the United States that the tech sector needed to do more because government was likely to do less. And so I think that added to pressure as well as just a level of activity that grew over the course of those four years, that was also manifest on January 6th…

There is a second factor that I also think is relevant. There’s almost an arc of the world’s democracies that are creating common expectations through new laws outside the United States that I think are influencing then expectations for what tech companies will do inside the United States.

Kurian immediately jumped to the international implications as well:

Cloud is a global utility, so we’re making our technology available to customers in many, many countries, and in each country we have to comply with the sovereign law of that country. So it is a complex question because what is considered acceptable in one country may be considered non-acceptable in another country.

Your example of First Amendment in the United States and the way that other countries may perceive it gets complicated, and that’s one of the questions we’re working through as we speak, which we don’t have a clear answer on, which is what action do you take if it’s in violation of one country’s law when it is a direct contradiction in another country’s law? And for instance, because these are global platforms, if we say you’re not going to be allowed to operate in the United States, the same company could just as well use our regions in other countries to serve, do you see what I mean? There’s no easy answer on these things.

The Global Internet?

If there was universal agreement on the importance of understanding the different levels of the stack, the question of how to handle moderation globally produced the widest range of responses.

Collison argued that a bias towards neutrality was the only way a service could operate globally:

When it comes to moderation decisions and responsibilities as internet infrastructure, that pushes you to an approach of relative neutrality precisely so that you don’t supersede the various governmental and democratic oversight and civil society mechanisms that will (and should) be applied in different countries.

Kurian highlighted the technical challenges in any other approach:

We have tried to get to what’s common, and the reality is it’s super hard on a global basis to design software that behaves differently in different countries. It is super difficult. And at the scale at which we’re operating and the need for privacy, for example, it has to be software and systems that do the monitoring. You cannot assume that the way you’re going to enforce ToS and AUPs is by having humans monitor everything, I mean we have so many customers at such a large scale. And so that’s probably the most difficult thing is saying virtual machines behave one way in Canada, and a different way in the United States, and a third way, I mean that’s super complicated.

Smith made the same argument:

If you’re a global technology business, most of the time, it is far more efficient and legally compliant to operate a global model than to have different practices and standards in different countries, especially when you get to things that are so complicated. It’s very hard to have content moderators make decisions about individual pieces of content under one standard, but to try to do it and say, “Well, okay, we’ve evaluated this piece of content and it can stay up in the US but go down in France.” Then you add these additional layers of complexity that add both cost and the risk of non-compliance which creates reputational risk.

You can understand Google and Microsoft’s desire for consistency: what makes the public cloud so compelling is its immense scale, but you lose many of those benefits if you have to operate differently in every country. Prince, though, thinks operating differently by country is inevitable:

I think that if you could say, German rules don’t extend beyond Germany and French rules don’t extend beyond France and Chinese rules don’t extend beyond China and that you have some human rights floor that’s in there.

But given the nature of the internet, isn’t that the whole problem? Because, anyone in Germany can go to any website outside of Germany.

That’s the way it used to be, I’m not sure that’s going to be the way it’s going to be in the future. Because, there’s a lot of atoms under all these bits and there’s an ISP somewhere, or there’s a network provider somewhere that’s controlling how that flows and so I think that, that we have to follow the law in all the places that are around the world and then we have to hold governments responsible to the rule of law, which is transparency, consistency, accountability. And so, it’s not okay to just say something disappears from the internet, but it is okay to say due to German law it disappeared from the internet. And if you don’t like it, here’s who you complain to, or here’s who you kick out of office so you do whatever you do. And if we can hold that, we can let every country have their own rules inside of that, I think that’s what keeps us from slipping to the lowest common denominator.

Prince’s final conclusion is along the same lines as where I landed in Internet 3.0 and the Beginning of Tech History. To date, the structure of the Internet has been governed by technological and especially economic incentives that drove towards centralization and Aggregation; after the events of January, though, political considerations will increasingly drive decision-making.

For many infrastructure providers, this presents an opportunity to abstract away this complexity for other companies; Stripe, to take a pertinent example, adroitly handles different payment methods and tax regimes with a single API. The challenge is more profound for the public clouds, though, which achieve their advantage not by abstracting away complexity, but by delivering universal primitives at scale.

This is why I take Smith’s comments as more of a warning: a commitment to consistency may lead to the lowest-common-denominator outcome Prince fears, where U.S. social media companies overreach on content, even as competition is squeezed out at the infrastructure level by policies guided by non-U.S. countries. It’s a bad mix, and public clouds in particular would be better off preparing for geographically distinct policies in the long run, even as they deliver on their commitment to predictability and process in the meantime, with a strong bias towards being hands-off. That will mean some difficult decisions, which is why it’s better to make a commitment to neutrality and due process now.

You can read the full interviews from which this Article was drawn.
