AI Homework

AI Homework ——

It happened to be Wednesday night when my daughter, in the midst of preparing for “The Trial of Napoleon” for her European history class, asked for help in her role as Thomas Hobbes, witness for the defense. I put the question to ChatGPT, which had just been announced by OpenAI a few hours earlier:

This is a confident answer, complete with supporting evidence and a citation to Hobbes work, and it is completely wrong. Hobbes was a proponent of absolutism, the belief that the only workable alternative to anarchy — the natural state of human affairs — was to vest absolute power in a monarch; checks and balances was the argument put forth by Hobbes’ younger contemporary John Locke, who believed that power should be split between an executive and legislative branch. James Madison, while writing the U.S. Constitution, adopted an evolved proposal from Charles Montesquieu that added a judicial branch as a check on the other two.

The ChatGPT Product

It was dumb luck that my first ChatGPT query ended up being something the service got wrong, but you can see how it might have happened: Hobbes and Locke are almost always mentioned together, so Locke’s articulation of the importance of the separation of powers is likely adjacent to mentions of Hobbes and Leviathan in the homework assignments you can find scattered across the Internet. Those assignments — by virtue of being on the Internet — are probably some of the grist of the GPT-3 language model that undergirds ChatGPT; ChatGPT applies a layer of Reinforcement Learning from Human Feedback (RLHF) to create a new model that is presented in an intuitive chat interface with some degree of memory (which is achieved by resending previous chat interactions along with the new prompt).

What has been fascinating to watch over the weekend is how those refinements have led to an explosion of interest in OpenAI’s capabilities and a burgeoning awareness of AI’s impending impact on society, despite the fact that the underlying model is the two-year old GPT-3. The critical factor is, I suspect, that ChatGPT is easy to use, and it’s free: it is one thing to read examples of AI output, like we saw when GPT-3 was first released; it’s another to generate those outputs yourself; indeed, there was a similar explosion of interest and awareness when Midjourney made AI-generated art easy and free (and that interest has taken another leap this week with an update to Lensa AI to include Stable Diffusion-driven magic avatars).

More broadly, this is a concrete example of the point former GitHub CEO Nat Friedman made to me in a Stratechery interview about the paucity of real-world AI applications beyond Github Copilot:

I left GitHub thinking, “Well, the AI revolution’s here and there’s now going to be an immediate wave of other people tinkering with these models and developing products”, and then there kind of wasn’t and I thought that was really surprising. So the situation that we’re in now is the researchers have just raced ahead and they’ve delivered this bounty of new capabilities to the world in an accelerating way, they’re doing it every day. So we now have this capability overhang that’s just hanging out over the world and, bizarrely, entrepreneurs and product people have only just begun to digest these new capabilities and to ask the question, “What’s the product you can now build that you couldn’t build before that people really want to use?” I think we actually have a shortage.

Interestingly, I think one of the reasons for this is because people are mimicking OpenAI, which is somewhere between the startup and a research lab. So there’s been a generation of these AI startups that style themselves like research labs where the currency of status and prestige is publishing and citations, not customers and products. We’re just trying to, I think, tell the story and encourage other people who are interested in doing this to build these AI products, because we think it’ll actually feed back to the research world in a useful way.

OpenAI has an API that startups could build products on; a fundamental limiting factor, though, is cost: generating around 750 words using Davinci, OpenAI’s most powerful language model, costs 2 cents; fine-tuning the model, with RLHF or anything else, costs a lot of money, and producing results from that fine-tuned model is 12 cents for ~750 words. Perhaps it is no surprise, then, that it was OpenAI itself that came out with the first widely accessible and free (for now) product using its latest technology; the company is certainly getting a lot of feedback for its research!

ChatGPT launched on wednesday. today it crossed 1 million users!

— Sam Altman (@sama) December 5, 2022

</div>

OpenAI has been the clear leader in terms of offering API access to AI capabilities; what is fascinating is about ChatGPT is that it establishes OpenAI as a leader in terms of consumer AI products as well, along with MidJourney. The latter has monetized consumers directly, via subscriptions; it’s a business model that makes sense for something that has marginal costs in terms of GPU time, even if it limits exploration and discovery. That is where advertising has always shined: of course you need a good product to drive consumer usage, but being free is a major factor as well, and text generation may end up being a better match for advertising, given its utility — and thus opportunity to collect first party data — is likely going to be higher than image generation for most people.

Deterministic vs. Probabilistic

It is an open question as to what jobs will be the first to be disrupted by AI; what became obvious to a bunch of folks this weekend, though, is that there is one universal activity that is under serious threat: homework.

Go back to the example of my daughter I noted above: who hasn’t had to write an essay about a political philosophy, or a book report, or any number of topics that are, for the student assigned to write said paper theoretically new, but in terms of the world generally simply a regurgitation of what has been written a million times before. Now, though, you can write something “original” from the regurgitation, and, for at least the next few months, you can do it for free.

The obvious analogy to what ChatGPT means for homework is the calculator: instead of doing tedious math calculations students could simply punch in the relevant numbers and get the right answer, every time; teachers adjusted by making students show their work.

That there, though, also shows why AI-generated text is something completely different; calculators are deterministic devices: if you calculate 4,839 + 3,948 - 45 you get 8,742, every time. That’s also why it is a sufficient remedy for teachers to requires students show their work: there is one path to the right answer and demonstrating the ability to walk down that path is more important than getting the final result.

AI output, on the other hand, is probabilistic: ChatGPT doesn’t have any internal record of right and wrong, but rather a statistical model about what bits of language go together under different contexts. The base of that context is the overall corpus of data that GPT-3 is trained on, along with additional context from ChatGPT’s RLHF training, as well as the prompt and previous conversations, and, soon enough, feedback from this week’s release. This can result in some truly mind-blowing results, like this Virtual Machine inside ChatGPT:

Did you know, that you can run a whole virtual machine inside of ChatGPT?

Great, so with this clever prompt, we find ourselves inside the root directory of a Linux machine. I wonder what kind of things we can find here. Let’s check the contents of our home directory.

Hmmm, that is a bare-bones setup. Let’s create a file here.

All the classic jokes ChatGPT loves. Let’s take a look at this file.

So, ChatGPT seems to understand how filesystems work, how files are stored and can be retrieved later. It understands that linux machines are stateful, and correctly retrieves this information and displays it.

What else do we use computers for. Programming!

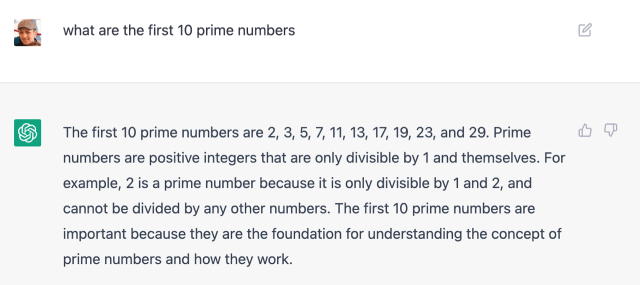

That is correct! How about computing the first 10 prime numbers:

That is correct too!

I want to note here that this codegolf python implementation to find prime numbers is very inefficient. It takes 30 seconds to evaluate the command on my machine, but it only takes about 10 seconds to run the same command on ChatGPT. So, for some applications, this virtual machine is already faster than my laptop.

The difference is that ChatGPT is not actually running python and determining the first 10 prime numbers deterministically: every answer is a probabilistic result gleaned from the corpus of Internet data that makes up GPT-3; in other words, ChatGPT comes up with its best guess as to the result in 10 seconds, and that guess is so likely to be right that it feels like it is an actual computer executing the code in question.

This raises fascinating philosophical questions about the nature of knowledge; you can also simply ask ChatGPT for the first 10 prime numbers:

Those weren’t calculated, they were simply known; they were known, though, because they were written down somewhere on the Internet. In contrast, notice how ChatGPT messes up the far simpler equation I mentioned above:

For what it’s worth, I had to work a little harder to make ChatGPT fail at math: the base GPT-3 model gets basic three digit addition wrong most of the time, while ChatGPT does much better. Still, this obviously isn’t a calculator: it’s a pattern matcher — and sometimes the pattern gets screwy. The skill here is in catching it when it gets it wrong, whether that be with basic math or with basic political theory.

Interrogating vs. Editing

There is one site already on the front-lines in dealing with the impact of ChatGPT: Stack Overflow. Stack Overflow is a site where developers can ask questions about their code or get help in dealing with various development issues; the answers are often code themselves. I suspect this makes Stack Overflow a goldmine for GPT’s models: there is a description of the problem, and adjacent to it code that addresses that problem. The issue, though, is that the correct code comes from experienced developers answering questions and having those questions upvoted by other developers; what happens if ChatGPT starts being used to answer questions?

It appears it’s a big problem; from Stack Overflow Meta:

Use of ChatGPT generated text for posts on Stack Overflow is temporarily banned.

This is a temporary policy intended to slow down the influx of answers created with ChatGPT. What the final policy will be regarding the use of this and other similar tools is something that will need to be discussed with Stack Overflow staff and, quite likely, here on Meta Stack Overflow.

Overall, because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers.

The primary problem is that while the answers which ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce. There are also many people trying out ChatGPT to create answers, without the expertise or willingness to verify that the answer is correct prior to posting. Because such answers are so easy to produce, a large number of people are posting a lot of answers. The volume of these answers (thousands) and the fact that the answers often require a detailed read by someone with at least some subject matter expertise in order to determine that the answer is actually bad has effectively swamped our volunteer-based quality curation infrastructure.

As such, we need the volume of these posts to reduce and we need to be able to deal with the ones which are posted quickly, which means dealing with users, rather than individual posts. So, for now, the use of ChatGPT to create posts here on Stack Overflow is not permitted. If a user is believed to have used ChatGPT after this temporary policy is posted, sanctions will be imposed to prevent users from continuing to post such content, even if the posts would otherwise be acceptable.

There are a few fascinating threads to pull on here. One is about the marginal cost of producing content: Stack Overflow is about user-generated content; that means it gets its content for free because its users generate it for help, generosity, status, etc. This is uniquely enabled by the Internet.

AI-generated content is a step beyond that: it does, especially for now, cost money (OpenAI is bearing these costs for now, and they’re | substantial), but in the very long run you can imagine a world where content generation is free not only from the perspective of the platform, but also in terms of user’s time; imagine starting a new forum or chat group, for example, with an AI that instantly provides “chat liquidity”.

For now, though, probabilistic AI’s seem to be on the wrong side of the Stack Overflow interaction model: whereas deterministic computing like that represented by a calculator provides an answer you can trust, the best use of AI today — and, as Noah Smith and roon argue, the future — is providing a starting point you can correct:

What’s common to all of these visions is something we call the “sandwich” workflow. This is a three-step process. First, a human has a creative impulse, and gives the AI a prompt. The AI then generates a menu of options. The human then chooses an option, edits it, and adds any touches they like.

The sandwich workflow is very different from how people are used to working. There’s a natural worry that prompting and editing are inherently less creative and fun than generating ideas yourself, and that this will make jobs more rote and mechanical. Perhaps some of this is unavoidable, as when artisanal manufacturing gave way to mass production. The increased wealth that AI delivers to society should allow us to afford more leisure time for our creative hobbies…

We predict that lots of people will just change the way they think about individual creativity. Just as some modern sculptors use machine tools, and some modern artists use 3d rendering software, we think that some of the creators of the future will learn to see generative AI as just another tool – something that enhances creativity by freeing up human beings to think about different aspects of the creation.

In other words, the role of the human in terms of AI is not to be the interrogator, but rather the editor.

Zero Trust Homework

Here’s an example of what homework might look like under this new paradigm. Imagine that a school acquires an AI software suite that students are expected to use for their answers about Hobbes or anything else; every answer that is generated is recorded so that teachers can instantly ascertain that students didn’t use a different system. Moreover, instead of futilely demanding that students write essays themselves, teachers insist on AI. Here’s the thing, though: the system will frequently give the wrong answers (and not just on accident — wrong answers will be often pushed out on purpose); the real skill in the homework assignment will be in verifying the answers the system churns out — learning how to be a verifier and an editor, instead of a regurgitator.

What is compelling about this new skillset is that it isn’t simply a capability that will be increasingly important in an AI-dominated world: it’s a skillset that is incredibly valuable today. After all, it is not as if the Internet is, as long as the content is generated by humans and not AI, “right”; indeed, one analogy for ChatGPT’s output is that sort of poster we are all familiar with who asserts things authoritatively regardless of whether or not they are true. Verifying and editing is an essential skillset right now for every individual.

It’s also the only systematic response to Internet misinformation that is compatible with a free society. Shortly after the onset of COVID I wrote Zero Trust Information that made the case that the only solution to misinformation was to adopt the same paradigm behind Zero Trust Networking:

The answer is to not even try: instead of trying to put everything inside of a castle, put everything in the castle outside the moat, and assume that everyone is a threat. Thus the name: zero-trust networking.

In this model trust is at the level of the verified individual: access (usually) depends on multi-factor authentication (such as a password and a trusted device, or temporary code), and even once authenticated an individual only has access to granularly-defined resources or applications…In short, zero trust computing starts with Internet assumptions: everyone and everything is connected, both good and bad, and leverages the power of zero transaction costs to make continuous access decisions at a far more distributed and granular level than would ever be possible when it comes to physical security, rendering the fundamental contradiction at the core of castle-and-moat security moot.

I argued that young people were already adapting to this new paradigm in terms of misinformation:

To that end, instead of trying to fight the Internet — to try and build a castle and moat around information, with all of the impossible tradeoffs that result — how much more value might there be in embracing the deluge? All available evidence is that young people in particular are figuring out the importance of individual verification; for example, this study from the Reuters Institute at Oxford:

We didn’t find, in our interviews, quite the crisis of trust in the media that we often hear about among young people. There is a general disbelief at some of the politicised opinion thrown around, but there is also a lot of appreciation of the quality of some of the individuals’ favoured brands. Fake news itself is seen as more of a nuisance than a democratic meltdown, especially given that the perceived scale of the problem is relatively small compared with the public attention it seems to receive. Users therefore feel capable of taking these issues into their own hands.

A previous study by Reuters Institute also found that social media exposed more viewpoints relative to offline news consumption, and another study suggested that political polarization was greatest amongst older people who used the Internet the least.

Again, this is not to say that everything is fine, either in terms of the coronavirus in the short term or social media and unmediated information in the medium term. There is, though, reason for optimism, and a belief that things will get better, the more quickly we embrace the idea that fewer gatekeepers and more information means innovation and good ideas in proportion to the flood of misinformation which people who grew up with the Internet are already learning to ignore.

The biggest mistake in that article was the assumption that the distribution of information is a normal one; in fact, as I noted in Defining Information, there is a lot more bad information for the simple reason that it is cheaper to generate. Now the deluge of information is going to become even greater thanks to AI, and while it will often be true, it will sometimes be wrong, and it will be important for individuals to figure out which is which.

The solution will be to start with Internet assumptions, which means abundance, and choosing Locke and Montesquieu over Hobbes: instead of insisting on top-down control of information, embrace abundance, and entrust individuals to figure it out. In the case of AI, don’t ban it for students — or anyone else for that matter; leverage it to create an educational model that starts with the assumption that content is free and the real skill is editing it into something true or beautiful; only then will it be valuable and reliable.

</div>文章版权归原作者所有。