China Chips and Moore’s Law

China Chips and Moore’s Law ——

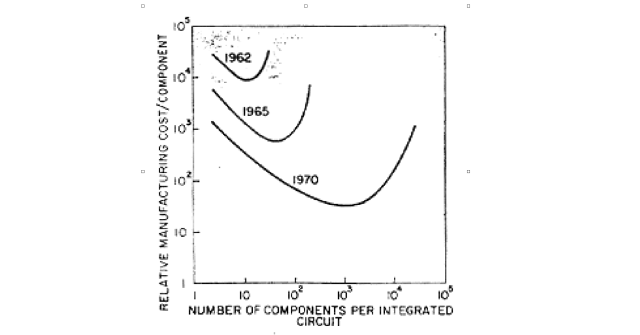

— Gordon Moore, Cramming More Components Onto Integrated Circuits

– Jensen Huang

On Tuesday the Biden administration tightened export controls for advanced AI chips being sold to China; the primary target was Nvidia’s H800 and A800 chips, which were specifically designed to skirt controls put in place last year. The primary difference between the H800/A800 and H100/A100 is the bandwidth of their interconnects: the A100 had 600 Gb/s interconnects (the H100 has 900GB/s), which just so happened to be the limit proscribed by last year’s export controls; the A800 and H800 were limited to 400 Gb/s interconnects.

The reason why interconnect speed matters is tied up with Nvidia CEO Jensen Huang’s thesis that Moore’s Law is dead. Moore’s Law, as originally stated in 1965, states that the number of transistors in an integrated circuit would double every year. Moore revised his prediction 10 years later to be a doubling every two years, which held until the last decade or so, when it has slowed to a doubling about every three years.

In practice, though, Moore’s Law has become something more akin to a fundamental precept underlying the tech industry: computing power will both increase and get cheaper over time. This precept — which I will call Moore’s Precept, for clarity — is connected to Moore’s technical prediction: smaller transistors can switch faster, and use less energy in the switching, even as more of them fit on a single wafer; this means that you can either get more chips per wafer or larger chips, either decreasing price or increasing power for the same price. In practice we got both.

What is critical is that the rest of the tech industry didn’t need to understand the technical or economic details of Moore’s Law: for 60 years it has been safe to simply assume that computers would get faster, which meant the optimal approach was always to build for the cutting edge or just beyond, and trust that processor speed would catch up to your use case. From an analyst perspective, it is Moore’s Precept that enables me to write speculative articles like AI, Hardware, and Virtual Reality: it is enough to see that a use case is possible, if not yet optimal; Moore’s Precept will provide the optimization.

The End of Moore’s Precept?

This distinction between Moore’s Law and Moore’s Precept is the key to understanding Nvidia CEO Jensen Huang’s repeated declarations that Moore’s Law is dead. From a technical perspective, it has certainly slowed, but density continues to increase; here is TSMC’s transistor density by node size, using the first (i.e. worse) iteration of each node size:1

| TSMC | Transistor Density (MTr/mm) | Year Introduced |

|---|---|---|

| 90 nm | 3.4 | 2004 |

| 65 nm | 5.6 | 2006 |

| 40 nm | 9.8 | 2008 |

| 28 nm | 16.6 | 2011 |

| 20 nm | 20.9 | 2014 |

| 16 nm | 28.9 | 2015 |

| 10 nm | 52.5 | 2017 |

| 7 nm | 91.2 | 2019 |

| 5 nm | 138.2 | 2020 |

Remember, though, that cost matters; here is the same table with TSMC’s introductory price/wafer, and what that translates to in terms of price/billion transistors:

| TSMC | MTr/mm | Year Introduced | Price/Wafer | Price/BTr |

|---|---|---|---|---|

| 90 nm | 3.4 | 2004 | $1,650 | $6.87 |

| 65 nm | 5.6 | 2006 | $1,937 | $4.89 |

| 40 nm | 9.8 | 2008 | $2,274 | $3.28 |

| 28 nm | 16.6 | 2011 | $2,891 | $2.46 |

| 20 nm | 20.9 | 2014 | $3,677 | $2.49 |

| 16 nm | 28.9 | 2015 | $3,984 | $1.95 |

| 10 nm | 52.5 | 2017 | $5,992 | $1.61 |

| 7 nm | 91.2 | 2019 | $9,346 | $1.45 |

| 5 nm | 138.2 | 2020 | $16,988 | $1.74 |

Notice that number on the bottom right: with TSMC’s 5 nm process the price per transistor increased — and it increased a lot (20%). The reason was obvious: 5 nm was the first process that required ASML’s extreme ultraviolet (EUV) lithography, and EUV machines were hugely expensive — around $150 million each.2 In other words, it appeared that while the technical definition of Moore’s Law would continue, the precept that chips would always get both faster and cheaper would not.

GPUs and Embarrassing Parallelism

Huang’s argument, to be clear, does not simply rest on the cost of 5 nm chips; remember Moore’s Precept is about speed as well as cost, and the truth is that a lot of those density gains have primarily gone towards power efficiency as energy became a constraint in everything from mobile to PCs to data centers. Huang’s thesis for several years now is that Nvidia has the solution to making computing faster: use GPUs.

GPUs are much less complex than CPUs; that means they can execute instructions much more quickly, but those instructions have to be much simpler. At the same time, you can run a lot of them at the same time to achieve outsized results. Graphics is, unsurprisingly, the most obvious example: every “shader” — the primary processing component of a GPU — calculates what will be displayed on a single portion of the screen; the size of the portion is a function of how many shaders you have available. If you have 1,024 shaders, each shader draws 1/1,024 of the screen. Ergo, if you have 2,048 shaders, you can draw the screen twice as fast. Graphics performance is “embarrassingly parallel”, which is to say it scales with the number of processors you apply to the problem.

This “embarrassing parallelism” is the key to GPUs outsized performance relative to CPUs, but the challenge is that not all software problems are easily parallel-izable; Nvida’s CUDA ecosystem is predicated on providing the tools to build software applications that can leverage GPU parallelism, and is one of the major moats undergirding Nvidia’s dominance, but most software applications still need the complexity of CPUs to run.

AI, though, is not most software. It turns out that AI, both in terms of training models and in leveraging them (i.e. inference) is an embarrassingly parallel application. Moreover, the optimum amount of scalability goes far beyond a computer monitor displaying graphics; this is why Nvidia AI chips feature the high-speed interconnects referenced by the chip ban: AI applications run across multiple AI chips at the same time, but the key to making sure those GPUs are busy is feeding them with data, and that requires those high speed interconnects.

That noted, I’m skeptical about the wholesale shift of traditional data center applications to GPUs; from Nvidia On the Mountaintop:

Humans — and companies — are lazy, and not only are CPU-based applications easier to develop, they are also mostly already built. I have a hard time seeing what companies are going to go through the time and effort to port things that already run on CPUs to GPUs; at the end of the day, the applications that run in a cloud are determined by customers who provide the demand for cloud resources, not cloud providers looking to optimize FLOP/rack.

There’s another reason to think that traditional CPUs still have some life in them as well: it turns out that Moore’s Precept may be back on track.

EUV and Moore’s Precept

The table I posted above only ran through 5 nm; the iPhone 15 Pro, though, has an N3 chip, and check out the price/transistor:

| TSMC | MTr/mm | Year Introduced | Price/Wafer | Price/BTr |

|---|---|---|---|---|

| 90 nm | 3.4 | 2004 | $1,650 | $6.87 |

| 65 nm | 5.6 | 2006 | $1,937 | $4.89 |

| 40 nm | 9.8 | 2008 | $2,274 | $3.28 |

| 28 nm | 16.6 | 2011 | $2,891 | $2.46 |

| 20 nm | 20.9 | 2014 | $3,677 | $2.49 |

| 16 nm | 28.9 | 2015 | $3,984 | $1.95 |

| 10 nm | 52.5 | 2017 | $5,992 | $1.61 |

| 7 nm | 91.2 | 2019 | $9,346 | $1.45 |

| 5 nm | 138.2 | 2020 | $16,988 | $1.74 |

| 3 nm (N3B) | 197.0 | 2023 | $20,000 | $1.44 |

| 3 nm (N3E) | 215.6 | 2023 | $20,000 | $1.31 |

While I only included the first version of each node previously, the N3B process, which is used for the iPhone’s A17 Pro chip, is a dead-end; TSMC changed its approach with the N3E, which will be the basis of the N3 family going forward. It also makes the N3 leap even more impressive in terms of price/transistor: while N3B undid the 5 nm backslide, N3E is a marked improvement over 7 nm.

Moreover, the gains are actually what you would expect: yes, those EUV machines cost a lot, but the price decreases embedded in Moore’s Precept are not a function of equipment getting cheaper — notice that the price/wafer has been increasing continuously. Rather, ever declining prices/transistor are a function of Moore’s Law, which is to say that new equipment, like EUV, lets us “Cram[] More Components Onto Integrated Circuits”.

What happened at 5 nm was similar to what happened at 20 nm, the last time the price/transistor increased: that was the node where TSMC started to use double-patterning, which means they had to do every lithography step twice; that both doubled the utilization of lithography equipment per wafer and also decreased yield. For that node, at least, the gains from making smaller transistors were outweighed by the costs. A year later, though, and TSMC launched the 16 nm node that re-united Moore’s Law with Moore’s Precept. That is exactly what seems to have happened with 3 nm — the gains of EUV are now significantly outweighing the costs — and early rumors about 2 nm density and price points suggests the gains should continue for another node.

Chip Ban Angst

All of this is interesting in its own right, but it’s particularly pertinent in light of the recent angst in Washington DC over Huawei’s recent smartphone with a 7 nm chip, seemingly in defiance of those export controls. I already explained why that angst was misguided in this September Update. To summarize my argument:

- TSMC had already shown that 7 nm chips could be made using deep ultraviolet (DUV)-based immersion lithography, and China had plenty of DUV lithography machines, given that DUV has been the standard for multiple generations of chips.

- China’s Semiconductor Manufacturing International Corp. (SMIC) had already made a 7 nm chip in 2022; sure it was simpler than the one launched in that Huawei phone, but that is the exact sort of progression you should expect from a competent foundry.

- SMIC is almost certainly not producing that 7nm chip economically; Intel, for example, could make a 7nm chip using DUV, they just couldn’t do it economically, which is why they ultimately switched to EUV.

In short, the problem with the chip ban was drawing the line at 10 nm: that line was arbitrary given that the equipment needed to make 10 nm chips had already been shown to be capable of producing 7 nm chips; that SMIC managed to do just that isn’t a surprise, and, crucially, is not evidence that the chip ban was a failure.

The line that actually matters is 5 nm, which is another way to say that the export control that will actually limit China’s long-term development is EUV. Fortunately the Trump administration had already persuaded the Netherlands to not allow the export of EUV machines, which the Biden administration further locked down with its chip ban and further coordination with the Netherlands. The reality is that a lot of chip-making equipment is “multi-nodal”; much of the machinery can be used at multiple nodes, but you must have EUV machines to realize Moore’s Precept, because it is the key piece of technology driving Moore’s Law.

By the same token, the A800/H800 loophole was a real one: the H800 is made on TSMC’s third-generation 5 nm process (confusingly called N4), which is to say it is made with EUV; the interconnect limits were meaningful, and would make AI development slower and more costly (because those GPUs would be starved of data more of the time), but it didn’t halt it. This matters because AI is the military application the U.S. should be the most concerned with: a lot of military applications run perfectly fine on existing chips (or even, in the case of guided weaponry, chips that were made decades ago); wars of the future, though, will almost certainly be undergirded by AI, a field that is only just now getting started.

This leads to a further point: the payoff from this chip ban will not come immediately. The only way the entire idea makes sense is if Moore’s Law continues to exist, because that means the chips that will be available in five or ten years will be that much faster and cheaper than the ones that exist today, increasing the gap. And, at the same time, the idea also depends on taking Huang’s argument seriously, because AI needs not just power but scale. Fortunately movement on both fronts is headed in the right direction.

There remain good arguments against the entire concept of the chip ban, including the obvious fact that China is heavily incentivized to built up replacements from scratch (and could have leverage on the U.S. on the trailing edge): perhaps in 20 years the U.S. will not only have lost its most potent point of leverage but will also see its most cutting edge companies undercut by Chinese competition. That die, though, has long since been cast; the results that matter are not a smartphone in 2023, but the capabilities of 2030 and beyond.

-

I am not certain I have the exact right numbers for older nodes, but I have confirmed that the numbers are in the right ballpark ↩

-

TSMC first used EUV with latter iterations of its 7nm process, but that was primarily to move down the learning curve; EUV was not strictly necessary, and the original 7nm process used immersion DUV lithography exclusively ↩

文章版权归原作者所有。